It feels the same…

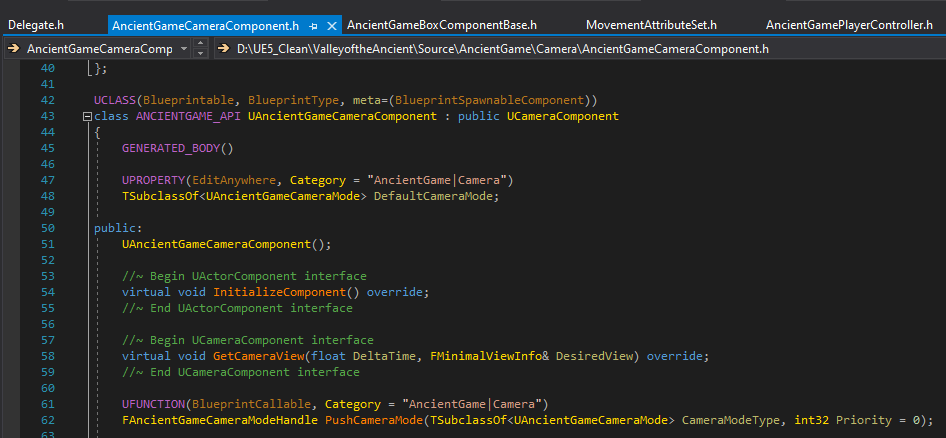

And I don’t say that as a bad thing necessarily, although it’s admittedly a bit of a mixed bag. UE5 is very obviously a major revision of UE4, and not a new engine. The code base is largely the same. The class structure is largely the same. The C++ macro hell is largely the same. When they say that your UE4 projects can move forward into UE5, this is why. What you’re gaining is replacements for core systems that your game isn’t directly interfacing with, so you drop your existing code in and it more or less just works.

Because of that, the barrier for developers jumping from UE4 to UE5 is going to be much lower than it was from UE3 to UE4. They’ll be spending more time learning what’s purely new instead of what’s changed. They won’t be figuring out what the appropriate level of BP vs. native is, because that knowledge is already built into their studio workflow. They’ll just drop in and go.

However, in its current state, that also means there’s a lot of fluff that feels like it could have been cut. Chaos Physics is allegedly going to be the first-class citizen here, but it’s still marked as beta and the PhysX source is still there. The Paper2D plugin is still there and enabled, despite the fact that it never really reached a level that made it attractive relative to Unity for sprite-based projects. This feels like the point where they can either cut things they haven’t been supporting, even if that breaks some of that transition plan, or really invest in bringing some of those less supported features up into first-class territory.

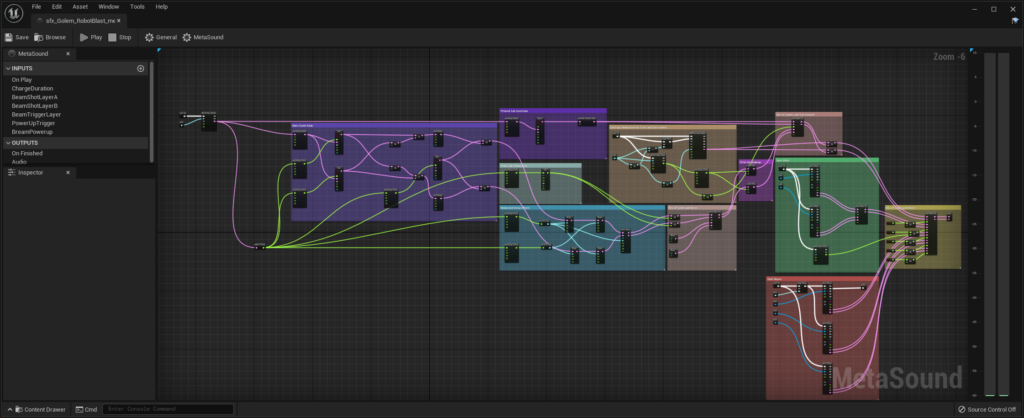

This also means that I’m inherently suspicious of them pushing things like audio into Blueprint. One of the biggest single performance issues that I’ve continually had to work around in the last 5 years is Blueprint. Yes, it’s extremely useful as a tool set, and yes, it allows designers to prototype things effectively, but every UE4 project has at some point hit a reckoning where development has to stop to hand-nativize Blueprints to get performance back and generalize functionality. Over time, the teams I’ve worked on have tended to use BP less, because people end up spending more time debugging and rewriting BP than they would have spent if programmers had just written the stuff in native in the first place. The tools in place, like the automated BP nativizer, just haven’t worked consistently enough for me to feel confident in their sustained use. Without an army of people available to convert prototypes to native as they come online, it’s typically just been safer for the teams I’ve been on to limit its use from the start.

Moving other systems into BP is one of those things where I immediately become suspicious. Admittedly, part of this is a built-in suspicion of Unreal sound in general. Most projects I’ve worked on since Rocket League have at some point moved their audio solution off to Wwise instead of using Unreal’s base solution. However, I’m curious about what the CPU cost will be of running large audio graphs in BP, or a BP-type asset. Taking a peek around, they’ve got some dynamic elements to modify the sounds at runtime, but in a repeatable fashion. If it’s got a high CPU overhead just to process what should be going on, I’m not sure that I can make a good case for not collapsing this down to a single wave file once the audio folks are happy with the result they’ve gotten. In cases where things are truly dynamic, this could have some interesting potential, but given my history of dealing with BP-related performance problems, I’m expecting to have to limit its use to high-value experiences.